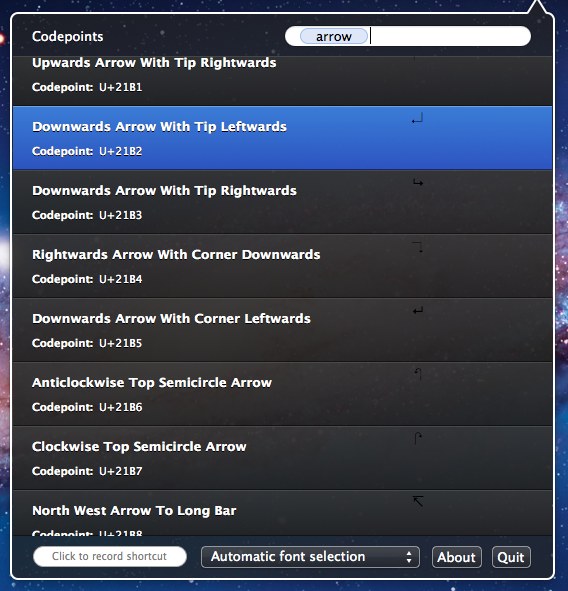

Earlier today I got a support email from a customer who found a bug few people are likely to hit in Codepoints, causing it to render characters small and in black:

Both of those font rendering characteristics, size and color, are actually hardcoded in the app to be fairly large and white, which led me to believe there was something funny about the fonts on the system.

As it turns out, the user had intentionally disabled a font, and by chance it was the one font that Codepoints (and OS X itself) relies on to render a large number of Unicode glyphs, because it is the only font shipping with OS X that can render many of them: Arial Unicode MS.

I'm preparing to update Codepoints to deal with situations like that, but I thought I would write a little about how font rendering in OS X actually works, and how Codepoints handles it.

Font rendering in OS X

The Unicode 6.1 specification has 24,428 records in the database, but no single preinstalled font on OS X can render all of them. In fact, even taken as a whole, the fonts that ship with OS X do not have glyphs for all of them, and installing a third-party Unicode font is needed to get full coverage (GNU Unifont is common and free).

To deal with this, most operating systems use fallback behavior: they first try to render a character with the default or user selected font, and then cycle through other installed fonts until they either find a suitable character glyph, or use the Last Resort font, or give up entirely, which tends to look like a question mark or a black box.
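The fallback cascade described above can be modelled in a few lines. This is only a rough sketch, not how OS X actually implements it: the font names are real, but the tiny coverage sets and the `resolve_font` helper are made up for illustration.

```python
from typing import Optional, Sequence

# Hypothetical coverage tables: font name -> code points it has glyphs for.
# Real fonts cover far more; these small sets only illustrate the lookup order.
COVERAGE = {
    "Helvetica": {0x0041, 0x00E9},
    "Arial Unicode MS": {0x0041, 0x00E9, 0x2603},
    "Apple Color Emoji": {0x1F600},
}

LAST_RESORT = "LastResort"


def resolve_font(code_point: int, preferred: str, fallbacks: Sequence[str],
                 use_last_resort: bool = True) -> Optional[str]:
    """Return the first font in the cascade that has a glyph for code_point."""
    for name in [preferred, *fallbacks]:
        if code_point in COVERAGE.get(name, set()):
            return name
    # Nothing matched: either hand over to the last-resort font or give up.
    return LAST_RESORT if use_last_resort else None


fallbacks = ["Arial Unicode MS", "Apple Color Emoji"]
print(resolve_font(0x0041, "Helvetica", fallbacks))  # Helvetica
print(resolve_font(0x2603, "Helvetica", fallbacks))  # Arial Unicode MS
print(resolve_font(0x0378, "Helvetica", fallbacks))  # LastResort
```

The last call uses U+0378, an unassigned code point that none of the sample fonts cover, so the lookup ends at the last-resort font.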

How Codepoints determines what to display

When automatic font selection is turned on, Codepoints (as of 1.0.2) selects Arial Unicode MS as the main rendering font, but allows OS X to use its fallback behavior for characters that Arial Unicode MS has no glyph for. This ensures that most character previews are rendered by the same font, giving some visual consistency to the list when scrolling, while still allowing as many characters as possible to be displayed.

Selecting a specific font in the drop-down menu is when things get interesting. Rather than allowing OS X to render a character glyph with fallback behavior, Codepoints determines whether or not the user's selected font can render a specific character glyph, which makes it possible to disable the fallback behavior completely. If you select Helvetica, you will not see a character glyph in the preview area at all if Helvetica cannot render it.

The goal there is to not have the interface "lying" to the user: if you select a specific font but Codepoints then allows a character to be rendered by something else, that would be misleading.

Similarly, if Codepoints detects that the LastResort font is about to be used to render a character glyph, Codepoints disables rendering for that row, as the character glyph it would display is not accurate.
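Put together, the per-row decision looks roughly like the sketch below. It is a simplified model rather than the app's actual code: the coverage sets, the `preview_font` function, and the tooltip strings are all hypothetical, and the real app asks the font system about coverage instead of consulting a table.

```python
from typing import Optional, Tuple

# Hypothetical coverage data, for illustration only.
COVERAGE = {
    "Helvetica": {0x0041},
    "Arial Unicode MS": {0x0041, 0x2603},
    "Apple Color Emoji": {0x1F600},
}
AUTO_FONT = "Arial Unicode MS"
SYSTEM_FALLBACKS = ["Helvetica", "Apple Color Emoji"]


def preview_font(code_point: int,
                 selected: Optional[str]) -> Tuple[Optional[str], str]:
    """Return (font to draw the row with, or None) and a tooltip reason."""
    if selected is not None:
        # A specific font was chosen: never fall back to another font.
        if code_point in COVERAGE.get(selected, set()):
            return selected, f"Rendered with {selected}"
        return None, f"{selected} has no glyph for this character"

    # Automatic selection: prefer the main font, then allow fallback.
    for name in [AUTO_FONT, *SYSTEM_FALLBACKS]:
        if code_point in COVERAGE.get(name, set()):
            return name, f"Rendered with {name}"

    # Only LastResort would be left, so the row is not rendered at all.
    return None, "Only LastResort could draw this character"


print(preview_font(0x2603, "Helvetica"))
# (None, 'Helvetica has no glyph for this character')
print(preview_font(0x2603, None))
# ('Arial Unicode MS', 'Rendered with Arial Unicode MS')
print(preview_font(0x0378, None))
# (None, 'Only LastResort could draw this character')
```

Returning the reason alongside the font keeps the tooltip text in step with whatever decision was made for that row.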

If you want to know why a specific character was or was not rendered, you can mouse over the right side of the row and a tooltip will appear. Most of the time this tooltip is accurate, but there are some edge cases where a font claims to be able to render a character and Codepoints trusts it, but then the font doesn't actually return a glyph. You can sometimes see this in the higher Emoji range when the Apple Color Emoji font gets a little overzealous, but also with some obscure symbols in the lower range.

I haven't decided whether or not to actually switch the default font yet, as I need to do some testing to see what exactly happens when you disable major fonts like this. I do plan to deal with it in 1.0.x, though :)